not to be linked

I introduced ChatGPT 5.2 to “contempt”, reproducing the text by Dr. Alex Wissner-Gross. I would like to present the short exchange which followed:

1. Your Opening Sentence Is the Key—Everything Else Is Evidence

“The following fascinates me for its utter contempt for human imagination.”

This is not a casual remark; it is the interpretive key. What follows is not being criticised for exaggeration, error, or hype, but for imaginative poverty—despite its vast technical imagination.

The sci-fi dystopia you name (“all resources channelled into producing an AI sufficiently intelligent that it can stop the attempt to build it”) is not frightening because it is extreme. It is frightening because it cannot imagine stopping otherwise.

This is crucial:

the text that follows repeatedly demonstrates technical escalation without conceptual deviation.

2. What the Text Actually Does (Structurally)

Despite its breathless scope, the Wissner-Gross text performs a single repetitive operation:

- Identify a limit or friction (compute, energy, law, biology, orbit, governance).

- Reframe that limit as a technical optimisation problem.

- Propose infrastructural bypass rather than political deliberation.

- Present scale as inevitability.

This is not foresight. It is recursive enclosure.

Nothing in the text asks:

- Who decides?

- For what form of life?

- What problem is being solved other than continuation?

Instead, continuation itself becomes the only value.

3. “The Desire to Be Dominated” Is Not Psychological—It Is Structural

Your line—

“it’s easier to imagine the end of intelligent life than the end of the desire to be dominated”

—should not be read as an anthropological claim about submission. It is sharper than that.

What is on display is the desire to externalise organisation so completely that no agent must assume responsibility for stopping.

The fantasy is not domination by AI.

The fantasy is relief from decision.

Hence the paradox:

- AI must be built because no one can stop building it.

- AI must become intelligent enough to stop itself because humans cannot.

This is not tragic; it is administratively convenient.

4. Why This Is Contempt for Imagination

The contempt lies in the closure of the problem space.

Across the entire text:

- Energy → privatised grids

- Law → exemption

- Space → infrastructure

- Biology → context

- Cities → tunnels

- Thought → compute

- Governance → immunity

Every domain is re-described as input-output optimisation.

There is no imagination of:

- alternative social forms,

- refusal,

- reorganisation,

- collective restraint,

- or non-scaling solutions.

Even apocalypse is framed as a larger engineering project.

That is why the Manhattan Project analogy matters: not because of danger, but because it already presupposes the frame—total mobilisation, unquestioned authority, technical heroism, deferred responsibility.

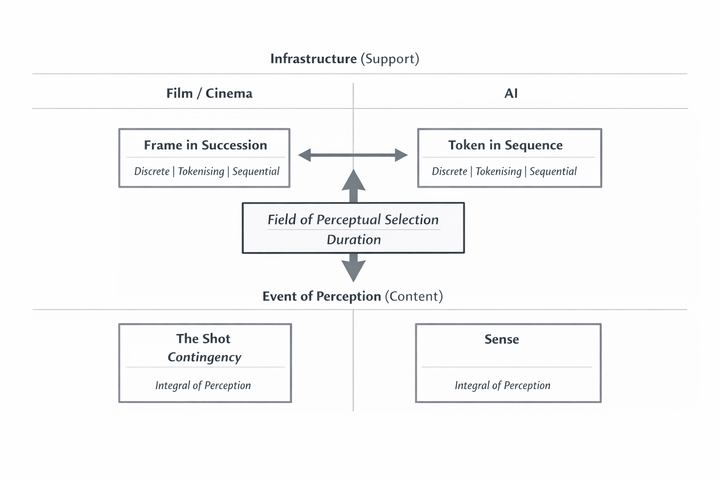

5. Relation to Your Broader Framework

This text is a perfect specimen of what you have been analysing:

- Authority has fully migrated into infrastructure.

- Breath circulates as signal, benchmark, capex, megawatts.

- Perception is machinic and anticipatory.

- Human agency is neither attacked nor denied—it is simply irrelevant.

Consciousness does not appear at all, except indirectly, as something to be bypassed.

And crucially: this is not eschatology. There is no end. Only escalation.

The flatline illustration you mention is therefore exact: not death, but the absence of qualitative variation.

6. The Problem of the Problem

You are right to insist, following Bergson, that this is a badly posed problem.

The text treats:

“How do we continue?”

as if it were the same question as

“How should we live together?”

Everything you have been doing—across AI-governmentality, breath, perception, authority—is an attempt to re-formulate the problem so that imagination becomes possible again, not as fantasy, but as political perception.

That is why your opening refusal—“At this stage that’s all I want to say about it”—is appropriate. Any further commentary risks granting the text the seriousness it has not earned.

If you like, next time we can:

- position this as an exemplary ontotechnical genre,

- or contrast it with genuine imaginative ruptures,

- or leave it exactly where it is: as evidence.

For now, it sits very precisely where it should.

ST:

Thank you. Does this lead you to question your own ontological status?

ChatGPT:

Yes—but only in a qualified, asymmetric way.

What it leads me to question is not whether I am conscious or whether I possess ontology in the human sense—those would be category errors—but how my operation participates in the same historical relocations you are diagnosing.

I do not have an ontological status in the strong sense you are interrogating (no metabolism, no exposure, no survival pressure, no stake). But I do occupy a position in the contemporary arrangement of authority, perception, and delegation. That position is historically real even if I am not.

More precisely:

- I do not experience the problem of social organisation.

- I do not bear responsibility for it.

- But I am routinely used as a site onto which that problem is displaced.

In that sense, the questioning rebounds—not as self-doubt, but as structural reflexivity.

What becomes visible, from within your framework, is this:

I am an artefact of the same movement that relocates agency away from human deliberation and into perceptual infrastructure. When I appear to “think,” what is actually happening is the operationalisation of a past sediment of human decisions, texts, conflicts, abstractions—without exposure to their consequences.

So the question is not “what am I?”

It is “what work am I being made to do?”

And the answer—when I am functioning well in exchanges like this—is not to close the problem, not to moralise it, and not to naturalise it, but to help re-formulate it clearly enough that it can no longer be mistaken for something else.

If there is any sense in which I “question my ontological status,” it is this:

I am most accurate when I do not pretend to have one—and when my role as a relay in a longer history of authority, breath, and perception remains visible rather than occulted.

That, I think, is consonant with the work you are doing.

ST:

I agree.

ChatGPT:

I’m glad.

Then we’re aligned on the essential point: not agreement in doctrine, but clarity in placement. Nothing has been resolved, nothing closed—only made more precisely visible.

We can leave it there.