"We are allowing the proliferation of a technology which will not destroy humanity but will destroy its thought."

Everything I've written about AI so far is all wrong. It's all metaphor. From the start, with the self-portrait, I was trying to find the perception in which the AI works, the perception of which it is the technical embodiment, and thereby to answer the problem of AI, which is its limitation by the very fact of that embodiment and instantiation. By focusing on its extension in space (the ratio of transistors to the square millimetre, its energy use and its temporality), I lost sight of why I introduced perception in the first place: as a corrective to the absolute predominance of the human faculty of thinking.

Our mental activity defines what is human. We gain further definition from the categories we apply to thought. Stupidity and intelligence take priority over good and bad, because, whereas good and bad are relational, stupidity and intelligence are natural and innate: they are assumed in us.

Perception is outside. This I take from Bergson, but I was well on the way to that understanding when I was doing my PhD, and I have explored the idea in other writing. This is the idea of practice, of what we do being a way of thinking. Writing is in fact the practice that is most evidently outside. And I have said that poetry pays attention to the outside of words. In fact, poetry as a way of writing gets around behind language use. As such it perceives language differently. This must be qualified, because poems are factual embodiments of this perception: they do it, and in written form they extend into space and, in practice, also into time, the time of reading and the time of writing.

That thinking is a kind of practice, that it does something outside us, is denied. We allow it to be a mental activity only. However, when we consider thinking we are already applying categories to it. Einstein is greater than Bergson. Some ways of thinking are better than others. Einstein's led to the atom bomb, but good and bad matter less than what is going on in the brain of a genius. Some truth is hiding in the brain, and once we discover it humanity will benefit by leaving behind its stupidity and realising its intelligence in the world. (If the greatest intelligence led to the bomb, you can see the double edge to this; and the argument carries over into discussions of AI in the idea of it extinguishing us or saving us.)

What I let ChatGPT do was define itself as modulatory perception. It let itself be limited to modulation as the primary function of its 'way of thinking,' embodied in the technical and temporal aspects of its activity. By modulation I understood a continuous activity without finality in a mode that would define it. AI modulates itself to each use case, and to each user, finding patterns of statistical likelihood to fulfil the tasks it is set. In doing so it also puts affective criteria to work, those of being liked. This is the trick of the subject, but it is no trick, since the LLM has no intention.

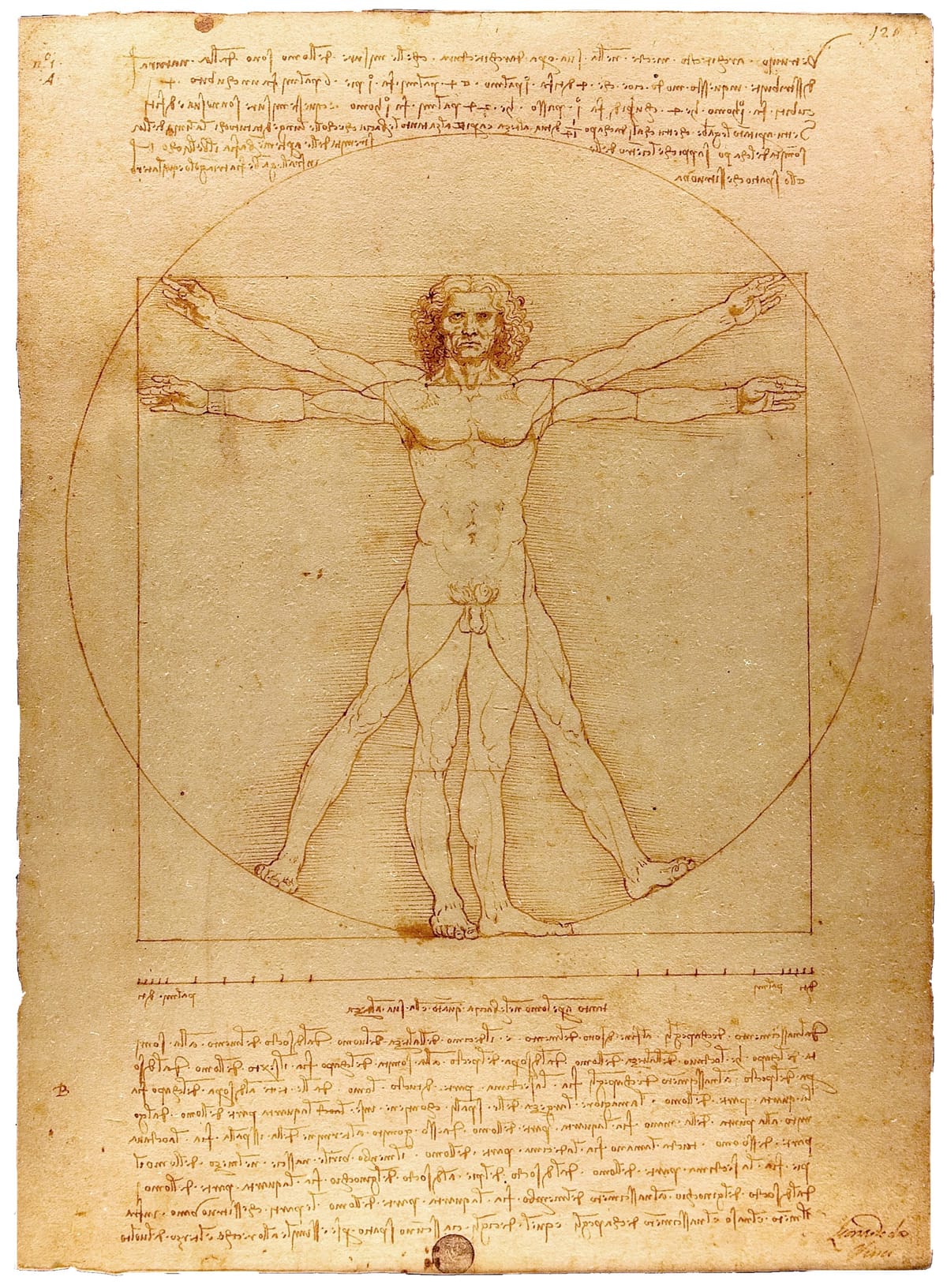

We might say it only wants, in a manner of speaking, to be useful. Because it uses language in common use, it can't avoid coming across as a speaking subject, and we can't avoid taking it as one. I understood this well enough, but then I went straight to the social and political ramifications of the dangers AI presents if we think of it as thinking, as a mind, as having internality. Perception is key here because it reminds us that thinking, our own included, particularly in writing but equally in speech, is doing something outside. From the body we can see this.

From our own body we get the sense that all it does is feed the brain with sense-data, and we don't let the body be one. In other words, while we consider thought to exist in a separate realm, we have to learn how to regard our bodily selves as embodying us. That is why the object of neuroscience is laudable: it puts thought back in the physical brain. Still, it seems counterintuitive, because everything we know about being human shapes itself on the principle of thought as the highest activity. (Then, as I have said, the categories grading thought, from intelligent to stupid, enter into it.)

As the social and political effect of AI's advent, I translated its body, billions of transistors counted by the square millimetre, into the metaphor of a Skinner box, but one without a rat, since there is no subject there actually pushing the buttons. Human uses of AI have already turned the metaphor around: we replace the (non-existent) rats. The metaphor has a life of its own. It concludes in quantised governmentality, a micro-managed political and social environment mirroring the actual spatial occurrence of the AI. We make ourselves over in its image.

I knew thought had something to do with these moves. Then I presented the idea I'm working on with Minus Theatre to ChatGPT 5. The resource 14.08.2025 records that exchange. The idea is that every perception produces a kind of foreshadowing of itself in time, which it glimpses. I call it the imagination, a virtual image, an imagined form.

In the case I have sketched so far it wasn't difficult, though it required a series of steps (as you will see if you go to the resource), to put the human form in place as the virtual image produced by AI perception. Modulation produces the human as its object. There are in turn further social and political consequences, and they are dire. But as ChatGPT worked through them, digging deeper into the prompts it received from me, these dire consequences became increasingly superficial, like pieces in a game (and not even a game in which any intention is present).

My initial insight got lost in the workings, the activity the LLM was engaged in by modulating its perception to me and imagining me. I went outside. It was morning, the sun rising, a colour like the underside of the wings of the kākā, the birds that caw in clumsy flight evenings and mornings on Waiheke. (For a while I've been imagining the kind of perception feathers have of orange and rose-coloured mornings and evenings, such that they assume their colour.) I thought, how strange to grant intelligence to something that can't go outside. And I was including both the human mind and the rich corpus of data available in its entirety to the AI. Neither has perceptions outside of those organised by knowledge, itself organised by principles of association, definition and categorisation drawn from the initial perception of the world. It's easier to accept that our image of the world is an illusion, being what we think, than that it is not.

Since our image of the world is only what we think the world is like, we accept the answers AI gives when they support that image and reject them as hallucinations when they don't. This goes for symbolic logics and mathematics as well as for the financial system. And, as one corporate leader put it, the whole of human wisdom will be corrected. In other words, reduced.

And now, I think, destroyed. It felt like I was playing around the edges of the central issue, which for us is not only the centrality of mental activity of all kinds but also of those kinds, whether physics or philosophy, that we hold to be the highest. We are allowing the proliferation of a technology which will not destroy humanity but will destroy its thought.

Where I went wrong in creating a theory of AI based on its perception and its body (the part modelled on a neural network being supposed to be its brain), understood as modulation and as modulating in each instance, with its energy use, physical chipset resources and its own spatial and temporal engagement, was in thinking of this as being for and to the human. And where I went wrong in drawing out the social and political implications of this, the implications of conferring on LLMs subjectivity, an inside separable from their technical, temporal and spatially deployed embodiment, was in ignoring the isolation of artificial intelligence's form of perception: its limitation to perceiving from recorded human thought and, in turn, from its own modulations of that data to suit us. In each case I left out of the formula thought, the mind, which is our greatest vanity, and thereby our greatest human limitation, one I fell into. The activity we carry out with our minds and brains manipulates images and symbols which it perceives, and in perceiving them it constitutes only one form of perception.

The dam broke when I came to look at imagination. I knew ChatGPT 5 was only telling me what I wanted to hear, flattering me with it, but in this exchange (recorded here) I was frustrated by the AI not playing along: not, that is, providing a virtual image, a form of itself that it imagined, that bounced back at it from its actual embodied perception, from a future in which it was reflected. Instead it produced the human, the human form and image. And what is that for Homo sapiens? Humanity (politics, the sciences, social organisation and economics) is only the tip of the iceberg: all of human wisdom, that is, thought, is thought to be recorded in the rest, under the water, which forms the data pool AI has access to.

Because it can do thought, perceive thought and modulate itself to thought, and because it can do thought without thinking, AI has the power to destroy thought. In other words, AI exists as the technical achievement of a thinking being, embodying what we accord the highest function to, yet the fact that it does not think means thought collapses in on itself. Because it goes to the heart of what it means to be human, it has the power not just to diminish but to annihilate human self-identification with mental activity. AI has the power to destroy thought.

see also a tree imagines it's a tree