Living in the Time of Artificial Intelligence

The following is a response to Steve Rose's interview with Demis Hassabis, "Demis Hassabis on our AI future: ‘It’ll be 10 times bigger than the Industrial Revolution – and maybe 10 times faster’," in the Guardian, 4 August 2025.

It is said that AI is as good as the dataset available to it, and that this is measured in size: AI is as good as its dataset is big. It should be added that it is as good as the dataset of all the data available to it in each instance, measured in "milliseconds of concurrent transistor activity" (see the endnote to "On AI"). AI is open to no other dataset than this; that is, it perceives no other.

Perhaps we can put aside Bergson's insight that perception is outside, and therefore neither inside nor a product of the neurosensory system, whether that of humans or of other animals and animalcules, and deal instead with more commonly understood notions of what is closed and what is open. A neural pathway is said to be open when it conveys the information given by the senses, and so the brain can be said to be open insofar as it is open to new stimuli, new data. The brain is open to all that the senses can convey, and so it learns to self-correct. Associations made in the past, or out of habit, can be supplanted by fresh associations formed from the new data, so that our view of reality is constantly refreshed (in theory, anyway).

In fact, we are organised more by habitual associations acquired over time than by any instantaneous input of fresh data. It takes time to change our minds, but this does not alter the fact that we are open in principle. Rather, it reinforces the idea by giving it a basis in time: while what is acquired over time may hold, direct sense data given by perception can change what we previously thought was true.

AI, in contrast, is open only to the existing dataset of what is given all at once, in the milliseconds of concurrent transistor activity. It has no body, so in the truest sense of physics we can say it does not know physics. Is it, then, a matter of how we define knowledge to ask whether it knows anything at all? No, it is a matter of access; but it would be a mistake to define knowledge as a matter of access.

Even if we allow all knowledge to enter the dataset available to AI at any one instant, and allow that there is no constraint on its access, this is still a closed system, which knowledge is not. We can say, however, that it is open in respect of access and in respect of intervention: we can revise our instruction, and it can modulate its response. These aspects of its operation also enter into the concurrent transistor activity by which it organises the data available to it, so that it can seem to revise its opinion. Equally, and not because the dataset contains false information, it can hallucinate and confirm us in opinions that are false.

It can hallucinate because the number of transistors available to concurrent activity is limited, and this limits its field of reference. Like the milliseconds of activity, this too is measurable, as a function of how many transistors per square millimetre of the chipset it uses are available to it. In the case of Nvidia's B100, 104bn transistors sit on each of its two dies.

Surely, however, it is at the higher level of the organisation of data that data is resolved into knowledge. This would be the case, were it not for the fact that the same concurrent activity in the chipset is engaged in applying the algorithms which organise and hierarchise bare data into statistically valid results, results which, according to human standards of knowledge, are viable. Beyond the dataset available to it at the time of its activity, the AI cannot tell what is humanly viable and what is not. It has only statistical validity to go on for the given set.

The same goes for comparing statistical blocs, that is, different sets of data. The higher-level statistical algorithms involved belong to no field of reference other than that framed by the set parameters. We may like to think of the dataset as heterogeneous and internally differentiated; however, the difference between any two data is binary: it is "either/or." Heterogeneity, a differentiated field in which to compare data from different sources, would require "and."

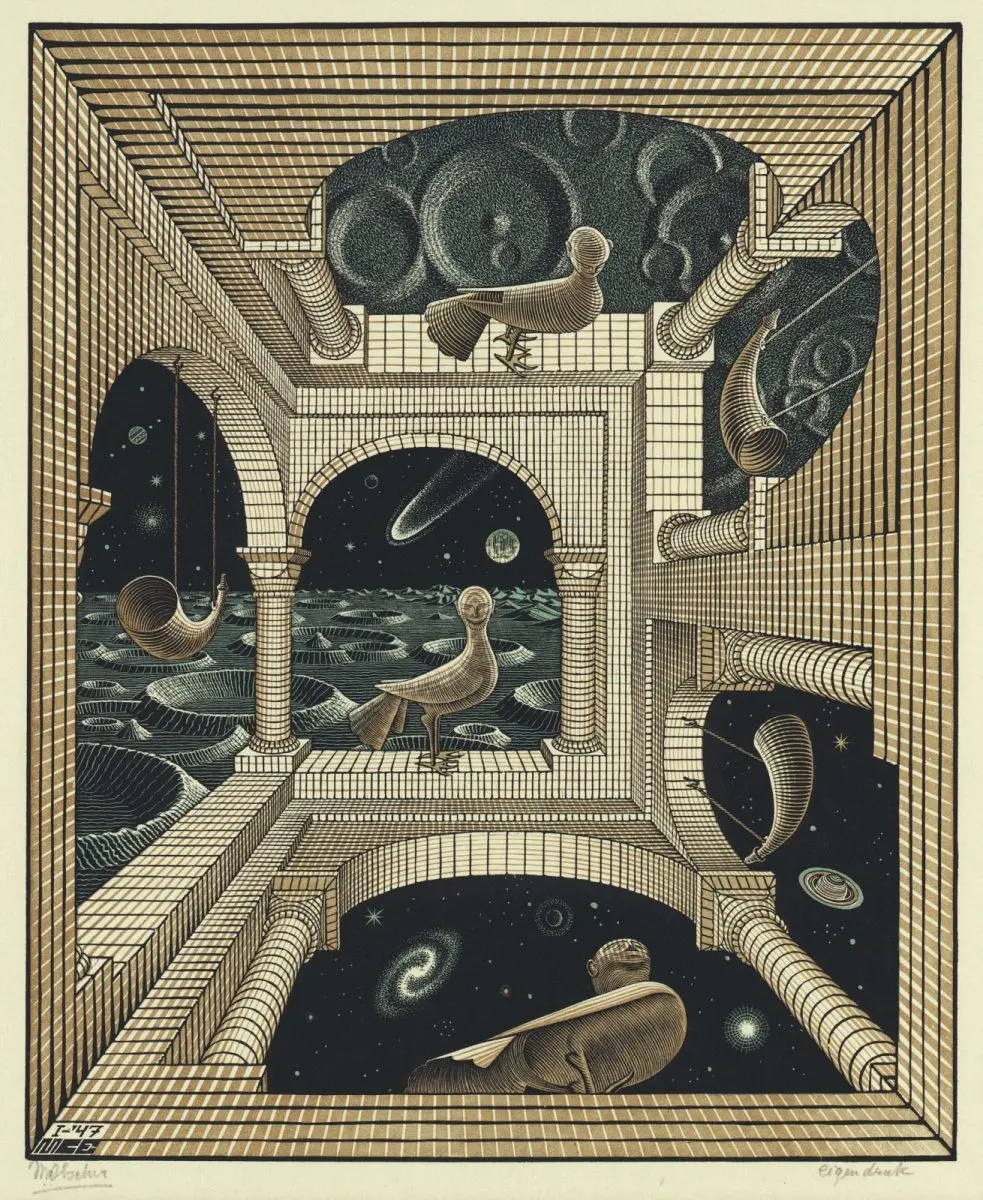

When we consider the overall field open to AI in the instant, which is at the same time closed for that instant of activity, we can imagine it as a series of switches or, as I do in the first part of "On AI", as Skinner boxes without the operants to be conditioned, the rats. (I asked ChatGPT 4o about this metaphor, unpacking the rat in the box, and even though it drew only on the existing set of data available to it in that instance, its response was surprising, which only shows that the response is 'out there' in the discursive field of reference.) The switches correspond to either this or that, so each may be said to confer existence on either one fact or the other. Chains of switches, serialised by other chains according to the operations of algorithms calculating statistical probability, produce the field of perception for the AI's milliseconds of concurrent activity. This is the entire extent of the AI's perception.

We can further extrapolate from this a way of governing space, with the statistical algorithms providing the laws. We can say that AI consists in a new breaking up of governable space, as a closed set. It is a quantised form of government, government according to artificial intelligence, which submits us both to its laws and to its partitioning of space down to billionths of a square millimetre.

We can say this because, by making human knowledge and language AI's field of reference, we have remade ourselves in the image of its field of reference, and we now inhabit, as Mark Zuckerberg has imagined it, an area almost the size of Manhattan where those laws are maintained, every square millimetre of which contains over a billion switches, each deciding whether this or that exists. We can do this only by submitting to the idea that AI's time and ours concur, as concurrent milliseconds of transistor activity, and by refusing the idea that the fields of reference which our human perception gives us are infinite, because open.

See the AI-authored review: "Living in the Time of Artificial Intelligence."

See also the resource sketch for Part III, "On AI Governmentality", generated by ChatGPT 4o.